Code Review Is Dead

Previously the bottleneck was writing code. It was slow and expensive. Today, the bottleneck is everything around it. AI can write code faster than any human—faster, even, than a human can read. Production is no longer the bottleneck; validation is. How do you know it actually works as it should?

The old process is broken

Traditional code review made sense when code was expensive. A senior developer would read through a pull request line by line, look for bugs, suggest improvements, approve or reject. This worked because humans wrote the code at human speed. You could keep up.

That math just isn't mathing anymore.

When AI can generate hundreds of lines in minutes, no human can review it all. Not thoroughly. Not safely. You'll skim. You'll inevitably miss things. You'll rubber-stamp PRs because you're tired and the diff is 47 files long.

The new process: oversight, not inspection

The shift today is from writing and reviewing code to overseeing its production and validation. You're not the coder anymore. You're the supervisor. In practice, this means four things change:

AI reviews the code, not you. Use agents to analyze pull requests, write descriptions, flag structural issues, and red-team the implementation. The AI reads every line because it can. You read the AI's report.

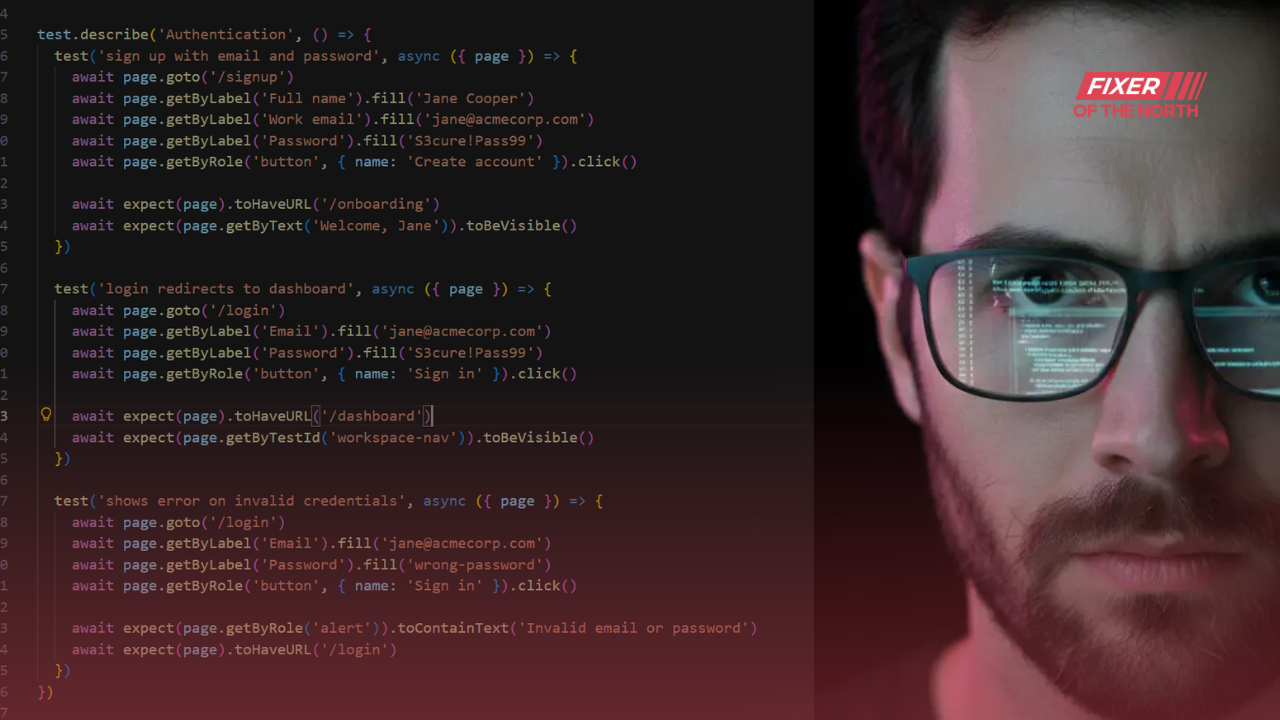

Tests become mandatory, not optional. Writing tests with AI tools is cheap now. There's no excuse. If you can't review every line yourself, you need automated validation that can test all of it. Tests are your safety net when the code moves faster than your attention.

Your job is reviewing the validation. Instead of asking "is this code correct?" you ask "are we testing the right things?" You review the test coverage. You check whether the AI reviewer added all the tests that matter. You verify the validation process is sound.

Review the instructions themselves. When AI generates code, it follows instructions. If something goes wrong, the problem often lives there. Check what instructions the AI was actually working from. Read the repo-level prompts. Sometimes the code is fine but the instructions are broken. Reviewing the output without reviewing the input that shaped it only gets you halfway.
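Part of "are we testing the right things?" can be automated before you ever read a report. Here is a minimal sketch in Python, not a definitive implementation: it flags changed source files in a PR that arrive without a matching test file. The function name `untested_changes`, the example paths, and the `test_<module>.py` naming convention are all assumptions made for illustration. Your repo's layout may differ.

```python
from pathlib import PurePosixPath


def untested_changes(changed_files: list[str]) -> list[str]:
    """Return changed Python source files with no matching changed test file.

    Assumed (hypothetical) convention: src/pkg/foo.py is covered by a file
    named test_foo.py somewhere in the same change set.
    """
    paths = [PurePosixPath(p) for p in changed_files]
    # Names of every test file touched by this change.
    test_names = {p.name for p in paths if p.name.startswith("test_")}

    missing = []
    for p in paths:
        # Skip non-Python files and the test files themselves.
        if p.suffix != ".py" or p.name.startswith("test_"):
            continue
        if f"test_{p.name}" not in test_names:
            missing.append(str(p))
    return missing


# Hypothetical PR: refund.py changed, but no test_refund.py came with it.
pr_files = [
    "src/billing/invoice.py",
    "tests/test_invoice.py",
    "src/billing/refund.py",
    "README.md",
]
print(untested_changes(pr_files))  # ['src/billing/refund.py']
```

A check like this doesn't tell you the tests are good. It only tells you where nobody even tried, which is the first thing worth knowing when the diff is too big to read.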

What the work actually looks like

You're reading reports from code review agents. Scanning test suites to make sure they cover edge cases. Asking "did we test the failure modes?" instead of "did we handle the null check on line 247?" You're using agents to attack your own code. Red-teaming. Finding holes before production does. And you're constantly reviewing and updating the repository instructions based on what you find.

On top of all that, this requires trusting systems you didn't build and can't fully verify line by line. You're trusting the generated test suite. Trusting the non-deterministic review agent. Trusting that your oversight of the validation is enough.

But the alternative is pretending you can review AI-generated code at human speed. That's not diligence. That's theater. Performance art, staged only because the process says there has to be a review.

The new skill

The valuable skill isn't writing code. Pretty soon it won't be reviewing it either. It's designing validation systems for AI-generated code. Knowing what to test. Knowing what questions to ask the review agent. Knowing when the green checkmarks are lying to you. And knowing how to correct for errors.
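One way to catch a lying green checkmark is mutation testing: break the code on purpose and see whether the tests notice. What follows is a toy sketch under stated assumptions, not a production tool. The function `is_adult`, both test suites, and the single `>=` to `>` mutation are hypothetical examples, and it uses only Python's standard `ast` module.

```python
import ast

# Hypothetical code under test.
SOURCE = """
def is_adult(age):
    return age >= 18
"""


class FlipGte(ast.NodeTransformer):
    """Mutate every `>=` into `>` (a simple boundary mutation)."""

    def visit_Compare(self, node):
        self.generic_visit(node)
        node.ops = [ast.Gt() if isinstance(op, ast.GtE) else op for op in node.ops]
        return node


def load(tree):
    """Compile a module AST and return its is_adult function."""
    namespace = {}
    exec(compile(tree, "<generated>", "exec"), namespace)
    return namespace["is_adult"]


def weak_suite(fn):
    # Passes, but never touches the boundary at 18.
    return fn(30) is True and fn(5) is False


def strong_suite(fn):
    # Adds the boundary cases the weak suite skips.
    return weak_suite(fn) and fn(18) is True and fn(17) is False


def survives(suite):
    """Return True if the suite still passes on the mutated code."""
    original = load(ast.parse(SOURCE))
    mutant_tree = ast.fix_missing_locations(FlipGte().visit(ast.parse(SOURCE)))
    mutant = load(mutant_tree)
    assert suite(original), "suite must pass on the original code"
    return suite(mutant)


print(survives(weak_suite))    # True: green checkmark, bug undetected
print(survives(strong_suite))  # False: the suite catches the mutant
```

Both suites are green on the real code. Only one of them fails when the code is quietly broken, and that difference is what you're actually reviewing.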

Code production is solved. Validation is the new bottleneck. Get good at that.